Adding Volcano Engine Voice Cloning to VirtualWife

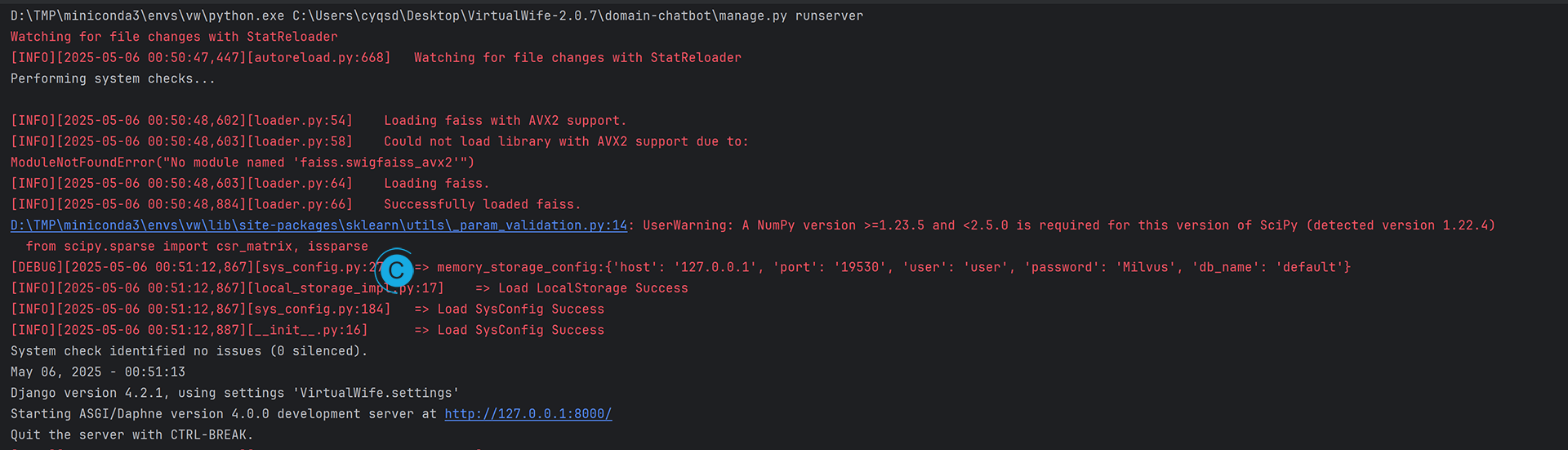

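VirtualWife is an open-source virtual digital human project. Its avatar is built on ChatVRM, a virtual streamer project; speech can be driven by any of several TTS backends, and text can be generated by an LLM.

yakami129/VirtualWife: VirtualWife is a virtual digital human project that supports Bilibili live streaming and supports openai and ollama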

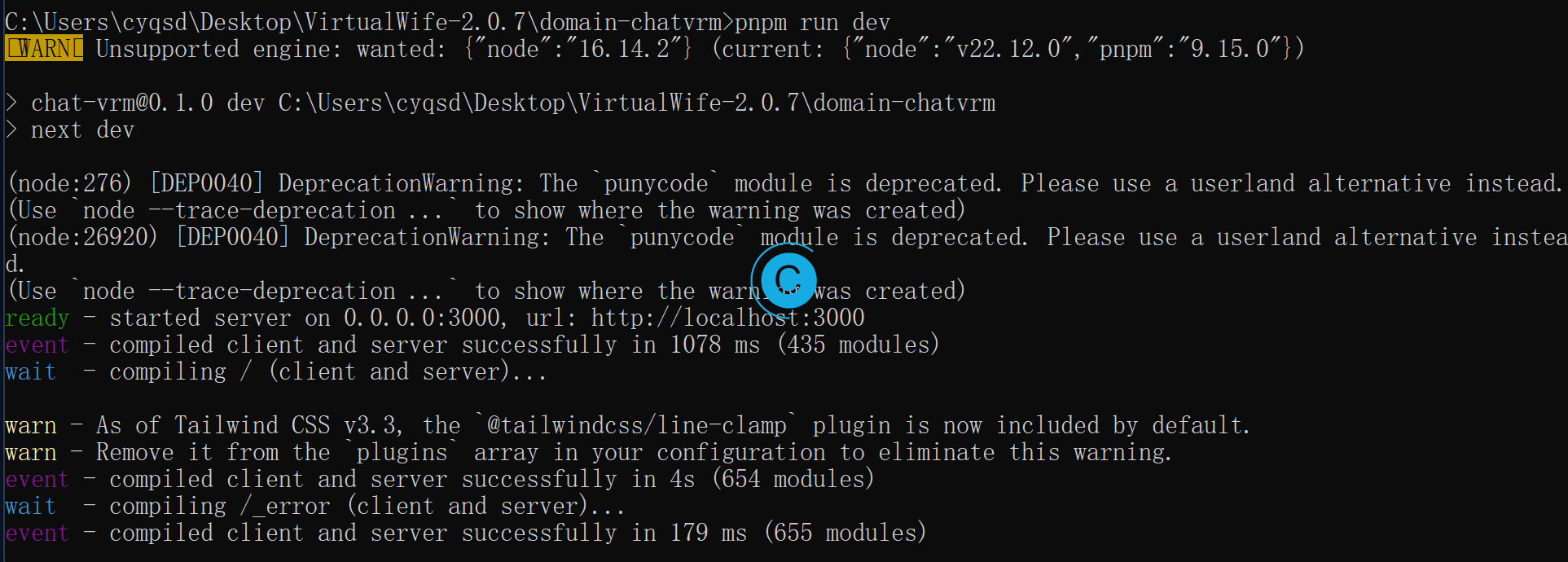

Add the file domain-chatbot/apps/speech/tts/bytedance_tts.py:

    import base64
    import json
    import os

    import requests

    from ..utils.uuid_generator import generate

    # Volcano Engine (ByteDance) HTTP TTS endpoint
    url = "https://openspeech.bytedance.com/api/v1/tts"
    access_token = "Nxxx-WQfixxxxx-xxx"
    headers = {
        # Note: a semicolon, not a space, separates "Bearer" and the token here
        "Authorization": f"Bearer;{access_token}"
    }

    # Voices exposed to VirtualWife; "id" is the ID of the cloned voice
    bytedance_tts_voices = [{
        "id": "S_xxxxx",
        "name": "xxx"
    }]


    class BytedanceTTSAPI:

        def request(self, params: dict) -> str:
            # Synthesize speech (verify=False disables TLS certificate verification)
            body = json.dumps(params, ensure_ascii=False).encode('utf-8')
            response = requests.post(url, headers=headers, data=body, verify=False)
            # The audio arrives base64-encoded in the "data" field of the JSON
            # response; decode it before touching the disk so a failed request
            # does not leave an empty file behind
            audio = base64.b64decode(json.loads(response.text)["data"])

            # Make sure the output directory exists
            file_name = generate() + ".wav"
            file_path = os.getcwd() + "/tmp/" + file_name
            os.makedirs(os.path.dirname(file_path), exist_ok=True)

            # Write the audio file
            with open(file_path, 'wb') as file:
                file.write(audio)
            return file_name


    class Bytedance:
        client: BytedanceTTSAPI

        def __init__(self):
            self.client = BytedanceTTSAPI()

        def synthesis(self, text: str, speaker: str) -> str:
            params = {
                "app": {
                    "appid": "xxxxx",
                    "token": "NxHV-xxx-xxx",
                    "cluster": "volcano_icl"
                },
                "user": {
                    "uid": "uid123"
                },
                "audio": {
                    # Use the requested voice rather than a hard-coded ID
                    "voice_type": speaker,
                    "encoding": "wav",
                    "speed_ratio": 1
                },
                "request": {
                    # A fresh ID for every request
                    "reqid": generate(),
                    "text": text,
                    "operation": "query"
                }
            }
            return self.client.request(params=params)

        def get_voices(self) -> list:
            return bytedance_tts_voices


    if __name__ == '__main__':
        client = Bytedance()
        client.synthesis(text="你好", speaker=bytedance_tts_voices[0]["id"])

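In essence, this is just a single HTTP POST to the TTS endpoint; the JSON response carries the synthesized audio as base64 in its "data" field.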
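The response handling can be pulled out into a small helper that fails loudly when no audio comes back. This is only a sketch: `decode_tts_response` is a hypothetical name, and the `code` and `message` fields used for the error text are assumptions about the response shape, not something this post confirms. Only the base64 `data` field is taken from the code above.

```python
import base64
import json


def decode_tts_response(raw: str) -> bytes:
    """Return the decoded audio bytes from a TTS JSON response.

    Assumption: on failure the service omits "data" and describes the
    problem in "code" / "message" (field names not confirmed here).
    """
    payload = json.loads(raw)
    data = payload.get("data")
    if not data:
        raise RuntimeError(
            f"TTS request failed: code={payload.get('code')}, "
            f"message={payload.get('message')}"
        )
    return base64.b64decode(data)


# Usage with a hand-made payload standing in for response.text
fake = json.dumps({"data": base64.b64encode(b"RIFF").decode("ascii")})
audio = decode_tts_response(fake)
```

Raising before any file is created keeps empty .wav files out of the tmp directory when a request is rejected.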

Add the following to domain-chatbot/apps/speech/tts/tts_driver.py:

    # At the top of tts_driver.py (bytedance_tts.py lives in the same package)
    from .bytedance_tts import Bytedance


    class BytedanceTTS(BaseTTS):
        '''ByteDance speech synthesis driver'''
        client: Bytedance

        def __init__(self):
            self.client = Bytedance()

        def synthesis(self, text: str, voice_id: str, **kwargs) -> str:
            return self.client.synthesis(text=text, speaker=voice_id)

        def get_voices(self) -> list[dict[str, str]]:
            return self.client.get_voices()
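How a driver like this gets selected at runtime is not shown here. One possible way is a simple name-to-class registry, sketched below. `TTS_REGISTRY`, `get_tts`, and the stub `BaseTTS` and `EchoTTS` classes are hypothetical stand-ins for illustration, not VirtualWife's actual code.

```python
from abc import ABC, abstractmethod


class BaseTTS(ABC):
    """Stand-in for the project's base class (hypothetical shape)."""

    @abstractmethod
    def synthesis(self, text: str, voice_id: str, **kwargs) -> str: ...

    @abstractmethod
    def get_voices(self) -> list[dict[str, str]]: ...


class EchoTTS(BaseTTS):
    """Dummy driver that fakes a file name instead of calling a service."""

    def synthesis(self, text: str, voice_id: str, **kwargs) -> str:
        return f"{voice_id}-{len(text)}.wav"

    def get_voices(self) -> list[dict[str, str]]:
        return [{"id": "demo", "name": "Demo voice"}]


# Hypothetical registry: a real setup would map e.g. "Bytedance" to BytedanceTTS
TTS_REGISTRY: dict[str, type[BaseTTS]] = {"Echo": EchoTTS}


def get_tts(name: str) -> BaseTTS:
    """Instantiate the driver registered under `name`."""
    try:
        return TTS_REGISTRY[name]()
    except KeyError:
        raise ValueError(f"Unknown TTS type: {name}") from None


driver = get_tts("Echo")
file_name = driver.synthesis(text="hello", voice_id=driver.get_voices()[0]["id"])
```

With a registry like this, adding a new backend only requires one more class and one more dictionary entry.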

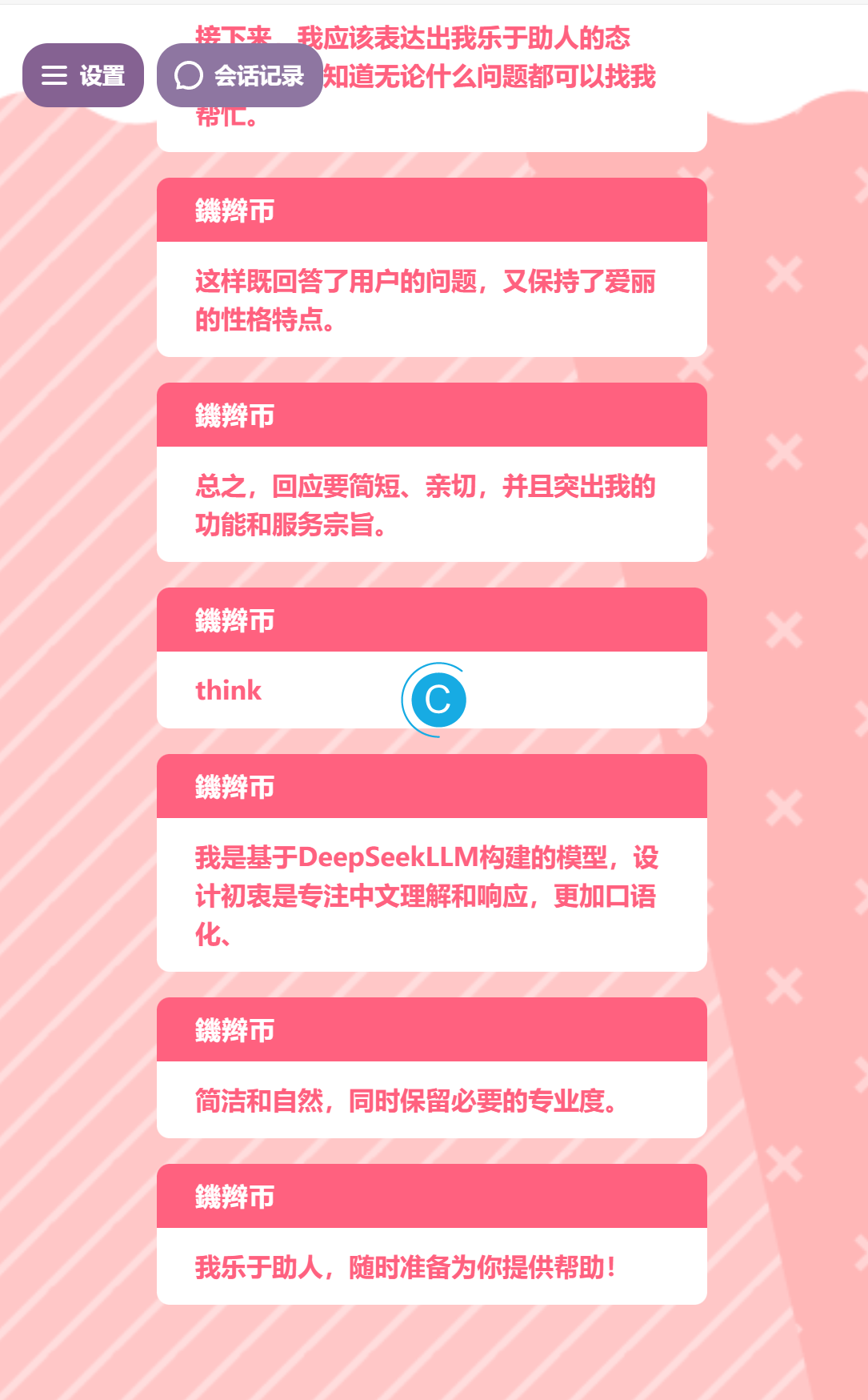

The think tags in the model's output can be hidden through the prompt.
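If prompting alone does not reliably suppress them, another option (not what this post does) is to strip the tags in code before the text reaches TTS. A minimal sketch, assuming the model wraps its reasoning in literal `<think>...</think>` tags; `strip_think` is a hypothetical helper name:

```python
import re

# Matches a complete <think>...</think> block, including newlines inside it
_THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def strip_think(text: str) -> str:
    """Remove <think>...</think> blocks so only the spoken reply remains."""
    return _THINK_BLOCK.sub("", text).strip()


reply = strip_think("<think>\nThe user greeted me.\n</think>\n\nHello there!")
```

Text without any think block passes through unchanged apart from trimmed surrounding whitespace.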

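In the end, Volcano Engine voice cloning turned out to be fairly expensive, and GPT-SoVITS V2Pro sounds considerably better, so I stopped tinkering with this approach.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.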