Converting a safetensors model to GGUF with llama.cpp

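llama.cpp ships a variety of tools for working with LLMs. One of them, convert_hf_to_gguf.py, converts a safetensors model to GGUF format. The conversion by itself does not quantize the model, so, as long as the output precision matches the source weights, the file size stays about the same; only the storage format changes.

See the official project for details: ggml-org/llama.cpp: LLM inference in C/C++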

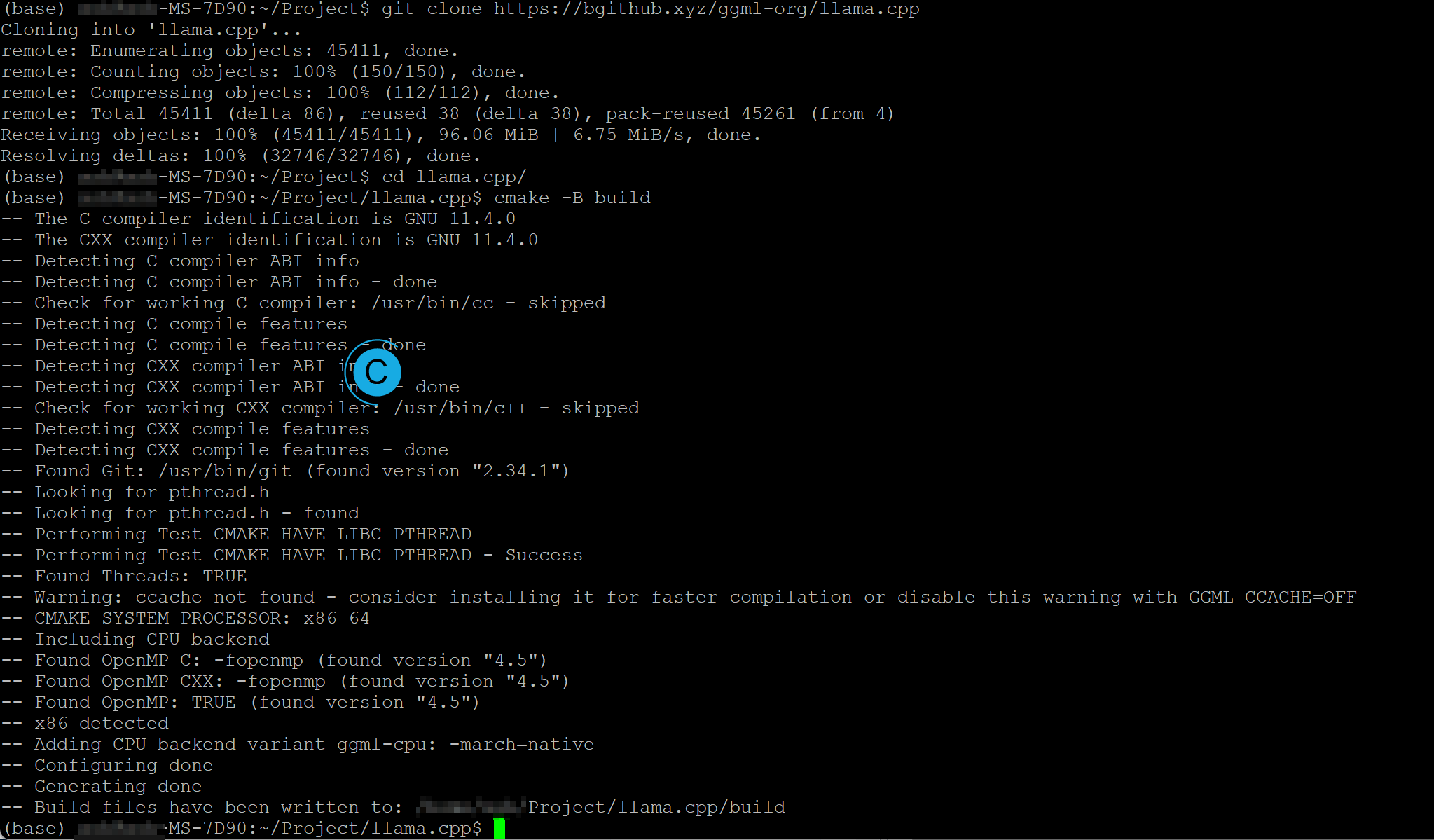

1. Clone the official project

git clone https://github.com/ggerganov/llama.cpp.git

2. Install the project dependencies

# Installing inside a conda environment is recommended.

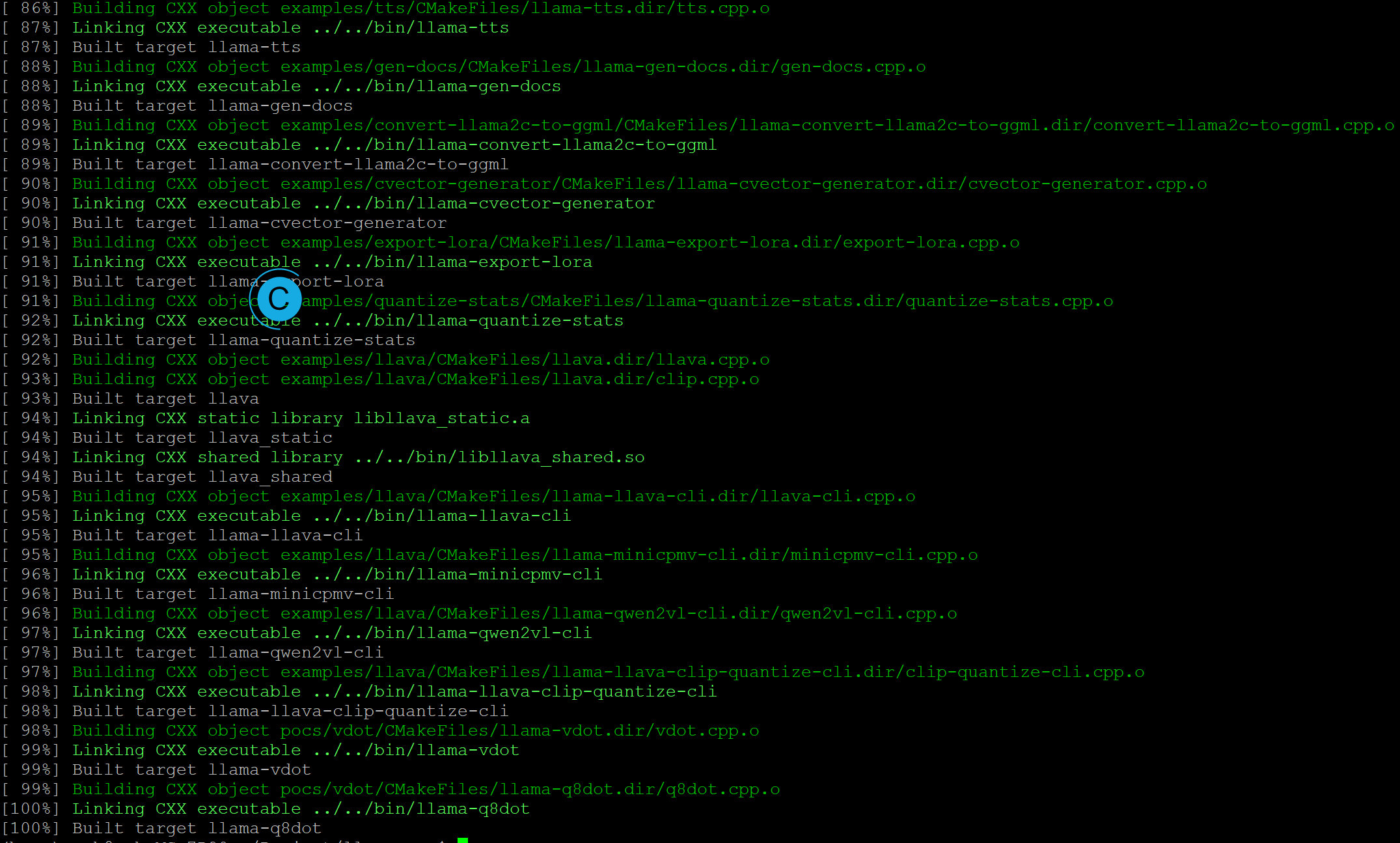

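pip install -r requirements.txt

The project recommends building with CMake, presumably for cross-platform portability; see the official documentation: llama.cpp/docs/build.md at master · ggml-org/llama.cpp. (If you only need the GGUF conversion script, you can skip this step.)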

cmake -B build

cmake --build build --config Release
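As a quick sanity check after the build, the sketch below lists which of the tools used later in this post are missing from the build output. It assumes the Linux layout seen in the terminal transcripts in this post (binaries under build/bin); the function name `missing_tools` is made up for illustration, and other platforms or generators may place the binaries elsewhere.

```python
from pathlib import Path


def missing_tools(build_dir, tools=("llama-quantize", "llama-imatrix")):
    """Return the names of expected tools that are not present in <build_dir>/bin.

    Assumption: binaries land in build/bin, as in the transcripts in this post.
    """
    bin_dir = Path(build_dir) / "bin"
    return [name for name in tools if not (bin_dir / name).is_file()]


if __name__ == "__main__":
    # Run from the llama.cpp checkout after `cmake --build build --config Release`.
    missing = missing_tools("build")
    if missing:
        print("Not found in build/bin:", ", ".join(missing))
    else:
        print("All expected tools are present.")
```

An empty list means both binaries are where the later steps expect them.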

(base) cyqsd@cyqsd-MS-7D90:~/Project/llama.cpp$ ls

AUTHORS CODEOWNERS docs include poetry.lock scripts

build common examples LICENSE prompts SECURITY.md

build-xcframework.sh CONTRIBUTING.md flake.lock Makefile pyproject.toml src

ci convert_hf_to_gguf.py flake.nix media pyrightconfig.json tests

cmake convert_hf_to_gguf_update.py ggml models README.md

CMakeLists.txt convert_llama_ggml_to_gguf.py gguf-py mypy.ini requirements

CMakePresets.json convert_lora_to_gguf.py grammars pocs requirements.txt

3. Convert to GGUF format

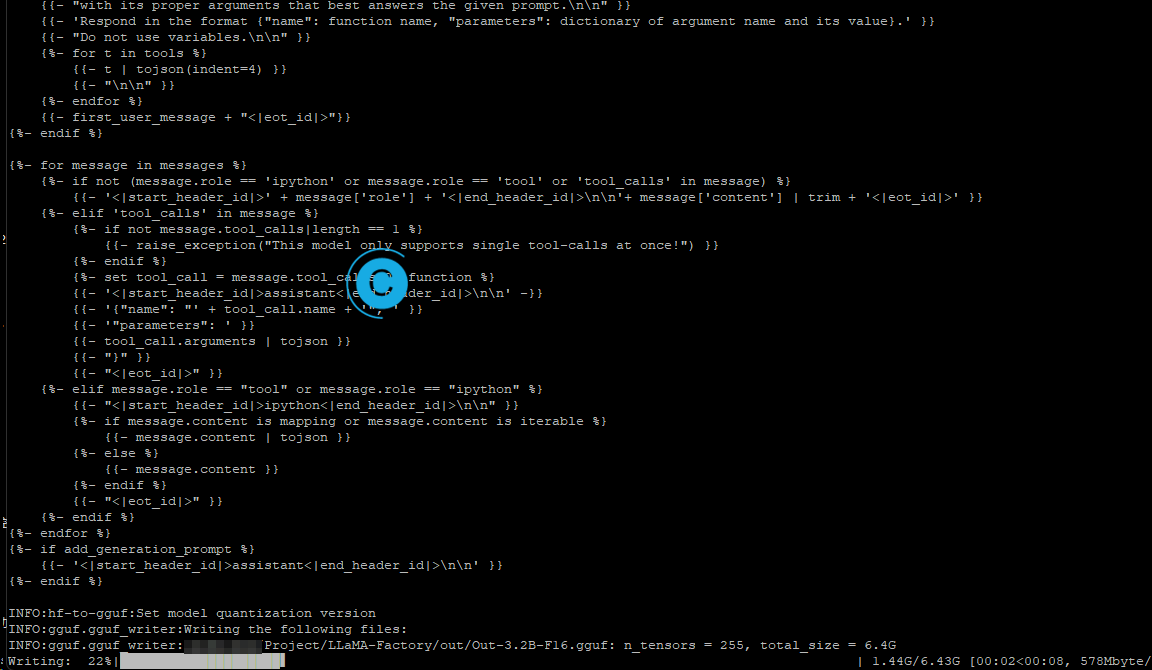

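Run convert_hf_to_gguf.py to perform the conversion. My model was fine-tuned with LLaMA-Factory, so I simply point the script at the export directory. You can also pass --outfile to set the output file name.

# Run the conversion; the argument is the local path of the source model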

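python convert_hf_to_gguf.py /home/cyqsd/Project/LLaMA-Factory/out

The script prints its progress while it writes the output file. I am using a 3B model here, which is small, so the conversion does not take long.

Wait until it reports success.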
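For scripted use, the same invocation can be assembled in Python. The following is a minimal sketch, not part of llama.cpp: `build_convert_command` is a hypothetical helper, and the output name `model-f16.gguf` is only an example. It uses the script's --outfile and --outtype options; run `python convert_hf_to_gguf.py --help` to confirm what your checkout accepts.

```python
def build_convert_command(model_dir, outfile=None, outtype=None,
                          python="python", script="convert_hf_to_gguf.py"):
    """Build the argument list for convert_hf_to_gguf.py without running it."""
    cmd = [python, script, str(model_dir)]
    if outfile is not None:
        cmd += ["--outfile", str(outfile)]   # explicit output file name
    if outtype is not None:
        cmd += ["--outtype", outtype]        # e.g. "f16" or "bf16"
    return cmd


if __name__ == "__main__":
    cmd = build_convert_command(
        "/home/cyqsd/Project/LLaMA-Factory/out",
        outfile="model-f16.gguf",  # hypothetical output name
        outtype="f16",
    )
    print(" ".join(cmd))
    # To actually run it from the llama.cpp checkout:
    # import subprocess; subprocess.run(cmd, check=True)
```

Building the list first and running it separately makes it easy to log or inspect the exact command before a long conversion starts.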

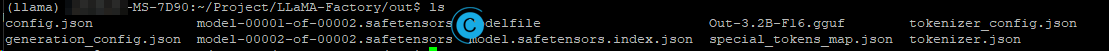

When it finishes, a new .gguf file appears in the source directory; this is the file we need.
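One way to check that the new file really is a GGUF file is to read its header. The sketch below assumes the GGUF v2+ layout: the 4-byte magic `GGUF`, then a little-endian uint32 version, a uint64 tensor count and a uint64 metadata key/value count. The function names are made up for this example, and the gguf-py package in the repository provides a fuller reader.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4 (magic) + 4 (version) + 8 (tensor count) + 8 (kv count)


def parse_gguf_header(header: bytes):
    """Return (version, tensor_count, metadata_kv_count) from raw header bytes."""
    if len(header) < HEADER_SIZE or header[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF header")
    # "<" selects little-endian with no padding: uint32 followed by two uint64.
    return struct.unpack("<IQQ", header[4:HEADER_SIZE])


def read_gguf_header(path):
    """Read and parse the fixed-size header at the start of a file."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))
```

Calling `read_gguf_header` on the converted file should return a small version number and a non-zero tensor count; a `ValueError` means the file does not start with the GGUF magic.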

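4. Quantize the GGUF without calibration

Use the llama-quantize binary built in the project directory (build/bin in the transcript below). This is good enough if your requirements are modest and accuracy is not a major concern; if you need better quality, see llama.cpp - Qwen for other quantization methods.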
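As a concrete illustration, the sketch below assembles a llama-quantize call following the positional order in its usage text (input file, output file, type, optional thread count). `build_quantize_command` and the file names are hypothetical; Q4_K_M is one of the types in the tool's list of allowed quantization types.

```python
def build_quantize_command(src, dst, qtype, nthreads=None,
                           binary="./llama-quantize"):
    """Build the argument list: input.gguf output.gguf type [nthreads]."""
    cmd = [binary, str(src), str(dst), qtype]
    if nthreads is not None:
        cmd.append(str(nthreads))  # optional trailing thread count
    return cmd


if __name__ == "__main__":
    cmd = build_quantize_command(
        "model-f16.gguf",      # hypothetical: file produced by the conversion step
        "model-Q4_K_M.gguf",   # hypothetical output name
        "Q4_K_M",
        nthreads=8,
    )
    print(" ".join(cmd))
    # To run it from build/bin:
    # import subprocess; subprocess.run(cmd, check=True)
```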

(base) cyqsd@cyqsd-MS-7D90:~/Project/llama.cpp/build/bin$ ./llama-quantize

usage: ./llama-quantize [--help] [--allow-requantize] [--leave-output-tensor] [--pure] [--imatrix] [--include-weights] [--exclude-weights] [--output-tensor-type] [--token-embedding-type] [--override-kv] model-f32.gguf [model-quant.gguf] type [nthreads]

--allow-requantize: Allows requantizing tensors that have already been quantized. Warning: This can severely reduce quality compared to quantizing from 16bit or 32bit

--leave-output-tensor: Will leave output.weight un(re)quantized. Increases model size but may also increase quality, especially when requantizing

--pure: Disable k-quant mixtures and quantize all tensors to the same type

--imatrix file_name: use data in file_name as importance matrix for quant optimizations

--include-weights tensor_name: use importance matrix for this/these tensor(s)

--exclude-weights tensor_name: use importance matrix for this/these tensor(s)

--output-tensor-type ggml_type: use this ggml_type for the output.weight tensor

--token-embedding-type ggml_type: use this ggml_type for the token embeddings tensor

--keep-split: will generate quantized model in the same shards as input

--override-kv KEY=TYPE:VALUE

Advanced option to override model metadata by key in the quantized model. May be specified multiple times.

Note: --include-weights and --exclude-weights cannot be used together

Allowed quantization types:

2 or Q4_0 : 4.34G, +0.4685 ppl @ Llama-3-8B

3 or Q4_1 : 4.78G, +0.4511 ppl @ Llama-3-8B

8 or Q5_0 : 5.21G, +0.1316 ppl @ Llama-3-8B

9 or Q5_1 : 5.65G, +0.1062 ppl @ Llama-3-8B

19 or IQ2_XXS : 2.06 bpw quantization

20 or IQ2_XS : 2.31 bpw quantization

28 or IQ2_S : 2.5 bpw quantization

29 or IQ2_M : 2.7 bpw quantization

24 or IQ1_S : 1.56 bpw quantization

31 or IQ1_M : 1.75 bpw quantization

36 or TQ1_0 : 1.69 bpw ternarization

37 or TQ2_0 : 2.06 bpw ternarization

10 or Q2_K : 2.96G, +3.5199 ppl @ Llama-3-8B

21 or Q2_K_S : 2.96G, +3.1836 ppl @ Llama-3-8B

23 or IQ3_XXS : 3.06 bpw quantization

26 or IQ3_S : 3.44 bpw quantization

27 or IQ3_M : 3.66 bpw quantization mix

12 or Q3_K : alias for Q3_K_M

22 or IQ3_XS : 3.3 bpw quantization

11 or Q3_K_S : 3.41G, +1.6321 ppl @ Llama-3-8B

12 or Q3_K_M : 3.74G, +0.6569 ppl @ Llama-3-8B

13 or Q3_K_L : 4.03G, +0.5562 ppl @ Llama-3-8B

25 or IQ4_NL : 4.50 bpw non-linear quantization

30 or IQ4_XS : 4.25 bpw non-linear quantization

15 or Q4_K : alias for Q4_K_M

14 or Q4_K_S : 4.37G, +0.2689 ppl @ Llama-3-8B

15 or Q4_K_M : 4.58G, +0.1754 ppl @ Llama-3-8B

17 or Q5_K : alias for Q5_K_M

16 or Q5_K_S : 5.21G, +0.1049 ppl @ Llama-3-8B

17 or Q5_K_M : 5.33G, +0.0569 ppl @ Llama-3-8B

18 or Q6_K : 6.14G, +0.0217 ppl @ Llama-3-8B

7 or Q8_0 : 7.96G, +0.0026 ppl @ Llama-3-8B

1 or F16 : 14.00G, +0.0020 ppl @ Mistral-7B

32 or BF16 : 14.00G, -0.0050 ppl @ Mistral-7B

0 or F32 : 26.00G @ 7B

COPY : only copy tensors, no quantizing

If you want importance-matrix quantization, you can use llama-imatrix.
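The importance-matrix workflow has two steps: compute the matrix from some calibration text, then pass it to llama-quantize through --imatrix. The sketch below only assembles the two commands. It assumes llama-imatrix accepts -m (model), -f (text file) and -o (output file), which you should confirm with `./llama-imatrix --help` on your build; the helper name and all file names are hypothetical.

```python
def build_imatrix_commands(model, calibration_text, quantized, qtype,
                           imatrix_file="imatrix.dat", bin_dir="."):
    """Return (compute_cmd, quantize_cmd) for an importance-matrix quantization."""
    # Step 1: run the model over the calibration text and save the matrix.
    compute = [f"{bin_dir}/llama-imatrix",
               "-m", model, "-f", calibration_text, "-o", imatrix_file]
    # Step 2: quantize, letting llama-quantize use the saved matrix.
    quantize = [f"{bin_dir}/llama-quantize",
                "--imatrix", imatrix_file, model, quantized, qtype]
    return compute, quantize


if __name__ == "__main__":
    compute, quantize = build_imatrix_commands(
        "model-f16.gguf",      # hypothetical unquantized GGUF
        "calibration.txt",     # hypothetical calibration text
        "model-IQ4_XS.gguf",   # hypothetical output name
        "IQ4_XS",
    )
    print(" ".join(compute))
    print(" ".join(quantize))
```

The calibration text should resemble the data the model will see in use, since the matrix decides which weights are kept most precisely.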

(base) cyqsd@cyqsd-MS-7D90:~/Project/llama.cpp/build/bin$ ./llama-imatrix --help

----- common params -----

-h, --help, --usage print usage and exit

--version show version and build info

--completion-bash print source-able bash completion script for llama.cpp

--verbose-prompt print a verbose prompt before generation (default: false)

-t, --threads N number of threads to use during generation (default: -1)

(env: LLAMA_ARG_THREADS)

-tb, --threads-batch N number of threads to use during batch and prompt processing (default:

same as --threads)

-C, --cpu-mask M CPU affinity mask: arbitrarily long hex. Complements cpu-range

(default: "")

-Cr, --cpu-range lo-hi range of CPUs for affinity. Complements --cpu-mask

--cpu-strict <0|1> use strict CPU placement (default: 0)

--prio N set process/thread priority : 0-normal, 1-medium, 2-high, 3-realtime

(default: 0)

--poll <0...100> use polling level to wait for work (0 - no polling, default: 50)

-Cb, --cpu-mask-batch M CPU affinity mask: arbitrarily long hex. Complements cpu-range-batch

(default: same as --cpu-mask)

-Crb, --cpu-range-batch lo-hi ranges of CPUs for affinity. Complements --cpu-mask-batch

--cpu-strict-batch <0|1> use strict CPU placement (default: same as --cpu-strict)

... (remaining output omitted) ...

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.