This Generation's Enthusiasm: Basic Usage of HBase

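After tinkering with Hadoop, HBase comes next. How much to write about it is open-ended, so this post only records basic usage and nothing more. These are my own study notes; by the time they went up on my blog they had been sitting around for a long time, I just never got round to tidying them up. I have added some of my own understanding to help me keep things straight. There are bound to be mistakes; please leave a comment and I will fix them as soon as I can. If you want to learn HBase properly, you are better off reading the official Apache HBase ™ Reference Guide and the Chinese edition of the official HBase documentation, which cover far more than I do.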

So what is HBase?

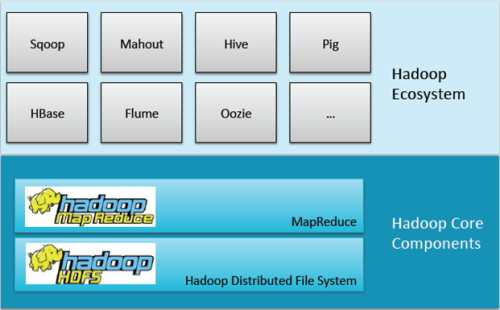

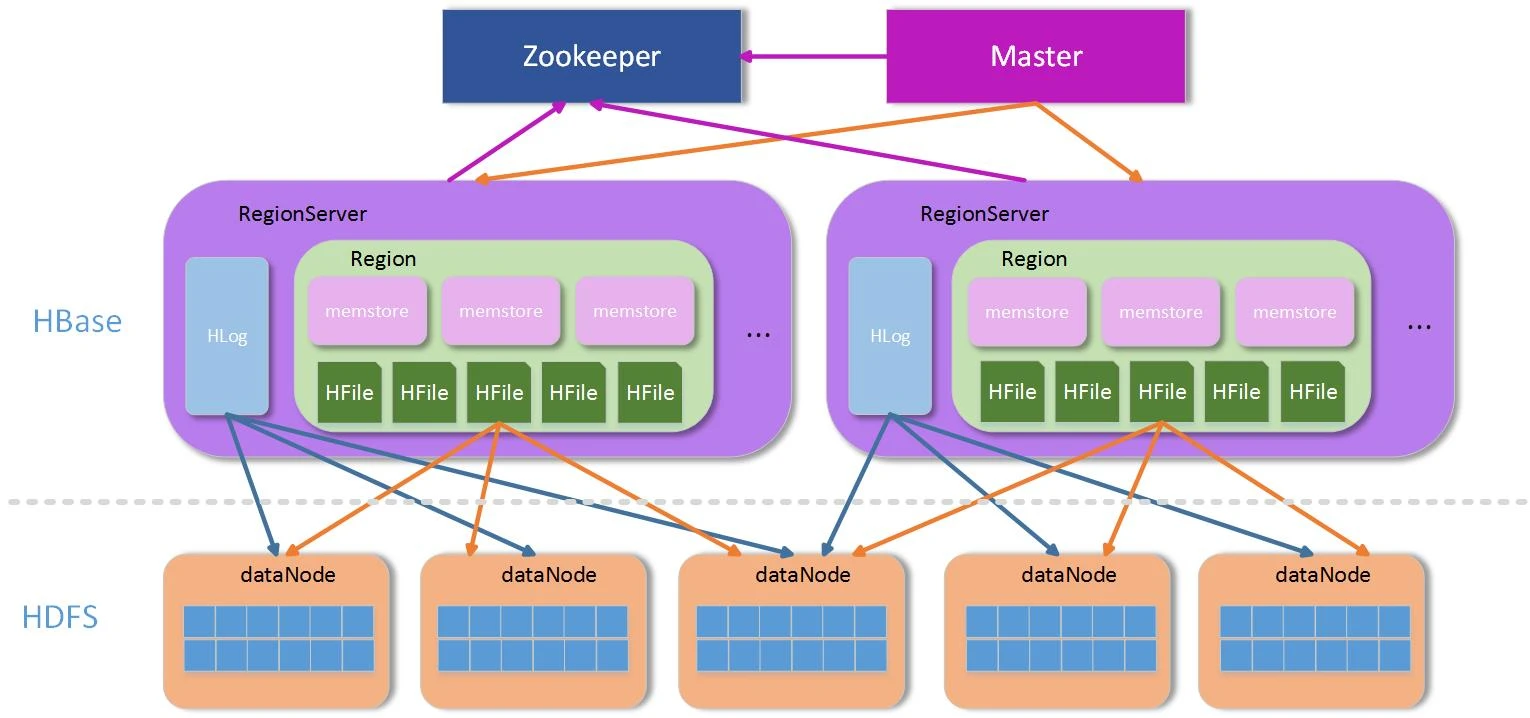

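HBase (Hadoop Database) is a highly reliable, high-performance, column-oriented, scalable distributed storage system. With HBase you can build a large-scale structured storage cluster on inexpensive commodity PC servers. HBase uses Hadoop HDFS as its file storage system, Hadoop MapReduce to process the massive data held in HBase, and ZooKeeper as its coordination service.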

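Before this post I wrote the following, which may be worth reading first:

Pseudo-distributed deployment of Hadoop 2.x on CentOS 6.9 and Windows

A further look at HDFS

This Generation's Enthusiasm: Learning How MapReduce Works (Part 1)

This Generation's Enthusiasm: Learning How MapReduce Works (Part 2)

The content below is quoted from the following post. It is written very clearly, so I won't write my own version:

[HBase] HBase Environment Setup and Basic Usage, by 魏晓蕾

Incidentally, that blogger has written quite a lot; have a look if you are interested.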

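| Feature | MySQL | HBase |
|---|---|---|
| Database concept | Has databases and tables | No database concept; namespaces take its place. Has tables, and every table belongs to a namespace |
| Primary key | The primary key uniquely identifies a row | No primary key, but there is a row key (rowkey), which plays much the same role |
| Columns | A table contains columns directly | Columns live inside column families. A column family groups columns with similar properties. At least one column family must be named when creating a table; the columns themselves need not be declared |
| Versions | A row and a column intersect at exactly one cell, which holds a single version of the data | Multiple versions can be stored (in effect, multiple values). A row and a column intersect at a group of cells; the number of versions kept is a configurable int. With a version count of 1 the group collapses to a single cell. By default the cell with the latest timestamp is shown |
| Empty values | A missing value is stored as NULL, which still takes up space | If a row has no data for a column, nothing is stored and no space is allocated |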
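The "Versions" row can be pictured with a small toy model. The sketch below is not HBase code: `VersionedCellSketch` and its methods are names made up for illustration, and it trims old versions eagerly on every write, which is a simplification of what a real cluster does. It only shows the idea that one row/column intersection holds several timestamped values and that a read returns the newest one by default.

```java
import java.util.TreeMap;

// Toy model of one row/column intersection holding several versions.
// Hypothetical names; nothing here is part of the HBase client API.
class VersionedCellSketch {
    private final int maxVersions;
    // timestamp -> value, sorted so the newest timestamp is the last entry
    private final TreeMap<Long, String> versions = new TreeMap<>();

    VersionedCellSketch(int maxVersions) {
        this.maxVersions = maxVersions;
    }

    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        // Simplification: drop the oldest versions immediately once over the limit.
        while (versions.size() > maxVersions) {
            versions.pollFirstEntry();
        }
    }

    // Default read: the value with the latest timestamp, or null if nothing was stored.
    String getLatest() {
        return versions.isEmpty() ? null : versions.lastEntry().getValue();
    }

    int versionCount() {
        return versions.size();
    }

    public static void main(String[] args) {
        VersionedCellSketch cell = new VersionedCellSketch(2);
        cell.put(1L, "a");
        cell.put(2L, "b");
        cell.put(3L, "c");
        System.out.println(cell.getLatest() + " / versions kept: " + cell.versionCount());
    }
}
```

With `maxVersions` set to 1 the model degenerates to a single cell, which matches the table's remark that the "group of cells" idea disappears in that case.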

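The Hadoop ecosystem:

A few more concepts worth covering:

Row key

The row key is the key used to retrieve records. There are only three ways to access rows in an HBase table:

1. By a single row key

2. By a range of row keys

3. By a full table scan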
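The three access paths above can be sketched with a plain sorted map. This is a toy model under my own assumptions, not the HBase client API: `RowKeyAccessSketch`, `scanRange`, and `scanAll` are invented names, and a `TreeMap` stands in for a table whose rows are kept sorted by row key.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Toy model only: a sorted in-memory map standing in for an HBase table.
// None of these names come from the HBase client API.
class RowKeyAccessSketch {
    // Rows are kept sorted by row key.
    private final TreeMap<String, String> rows = new TreeMap<>();

    void put(String rowKey, String value) {
        rows.put(rowKey, value);
    }

    // 1. Access by a single row key.
    String get(String rowKey) {
        return rows.get(rowKey);
    }

    // 2. Access by a row-key range: start key inclusive, stop key exclusive.
    List<String> scanRange(String startKey, String stopKey) {
        return new ArrayList<>(rows.subMap(startKey, true, stopKey, false).keySet());
    }

    // 3. Full table scan: visit every row in key order.
    List<String> scanAll() {
        return new ArrayList<>(rows.keySet());
    }

    public static void main(String[] args) {
        RowKeyAccessSketch table = new RowKeyAccessSketch();
        table.put("row1", "value1");
        table.put("row2", "value2");
        table.put("row3", "value3");
        System.out.println(table.get("row2"));
        System.out.println(table.scanRange("row1", "row3"));
        System.out.println(table.scanAll());
    }
}
```

Because every lookup goes through the row key, the choice of row key decides which queries are cheap (a point get or a short range) and which fall back to scanning everything.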

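Column family

Relational databases have no concept of a column family. Column families are declared when the table is created, and one column family can contain many columns. The data in a column is stored as raw bytes and has no data type.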
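Since values carry no type, the application has to turn its own values into bytes and remember how to read them back. The sketch below shows that idea using only the JDK; `UntypedBytesSketch` and its helper methods are names I made up. HBase ships its own `org.apache.hadoop.hbase.util.Bytes` utility for this job, which the sketch deliberately does not use so that it runs without any HBase jars.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Illustration of "no data types": the store only ever sees byte arrays,
// so the caller must know how each value was encoded. Hypothetical names.
class UntypedBytesSketch {
    static byte[] encodeLong(long value) {
        return ByteBuffer.allocate(8).putLong(value).array();
    }

    static long decodeLong(byte[] bytes) {
        return ByteBuffer.wrap(bytes).getLong();
    }

    static byte[] encodeString(String value) {
        return value.getBytes(StandardCharsets.UTF_8);
    }

    static String decodeString(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] storedNumber = encodeLong(42L);
        byte[] storedText = encodeString("value1");
        // Nothing in the stored bytes says which one is a number and which is text.
        System.out.println(storedNumber.length + " bytes, " + storedText.length + " bytes");
        System.out.println(decodeLong(storedNumber) + ", " + decodeString(storedText));
    }
}
```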

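1. Installing HBase

Before installing, decide which deployment mode to use. For a single-node test environment there are two options:

1. Standalone, single instance: works as long as the ports are not already in use

2. Pseudo-distributed cluster on a single machine

Deployment needs ZooKeeper, which is mentioned at the end of this post. Because HBase can keep its data on the local file system instead of HDFS, simple testing and learning does not require starting HDFS at all, although many HDFS features are then unavailable.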

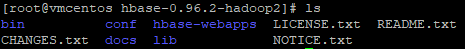

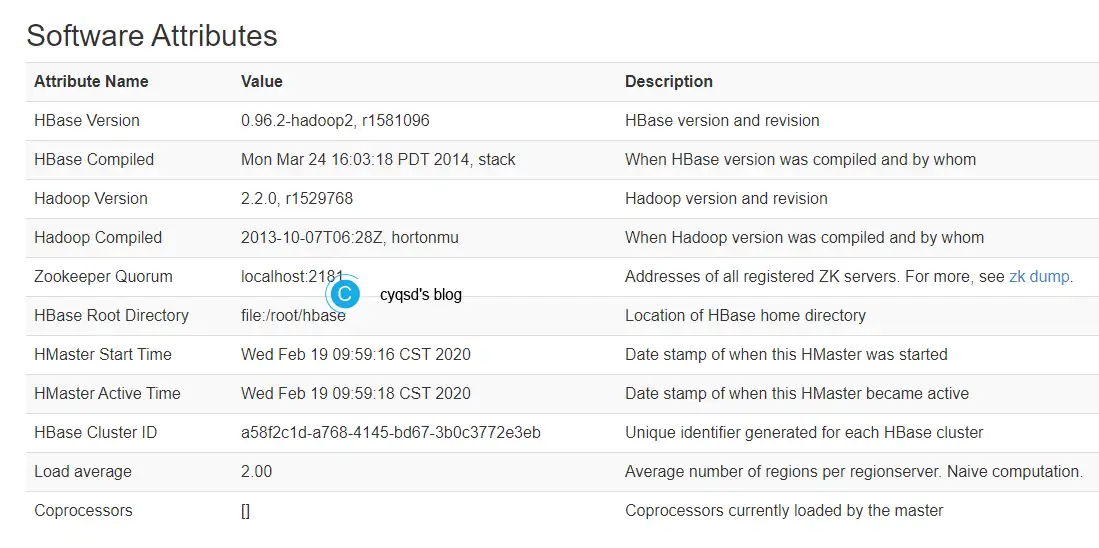

This post uses hbase-0.96.2-hadoop2-bin.tar.gz; make sure the HBase version matches your Hadoop version. This release line stopped being maintained long ago:

- 0.96 was EOM'd September 2014; 0.94 and 0.98 were EOM'd April 2017

- 1.0 was EOM'd January 2016; 1.1 was EOM'd December 2017; 1.2 was EOM'd June 2019

- 2.0 was EOM'd September 2019

Now let's get started. First, extract the archive into a directory:

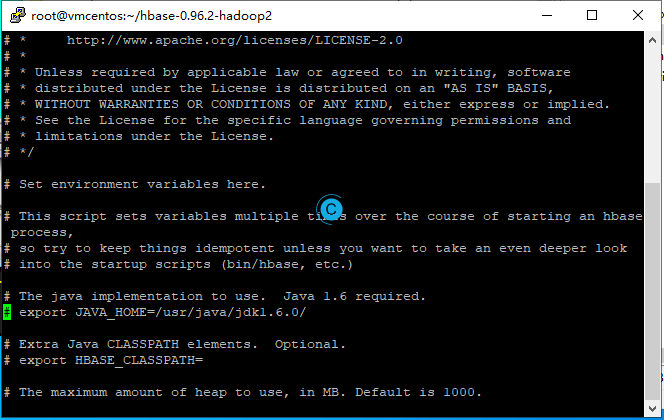

The environment still needs configuring. Edit conf/hbase-env.sh

Uncomment the JAVA_HOME line and point it at your JDK:

/usr/java/jdk1.7.0_80

Then:

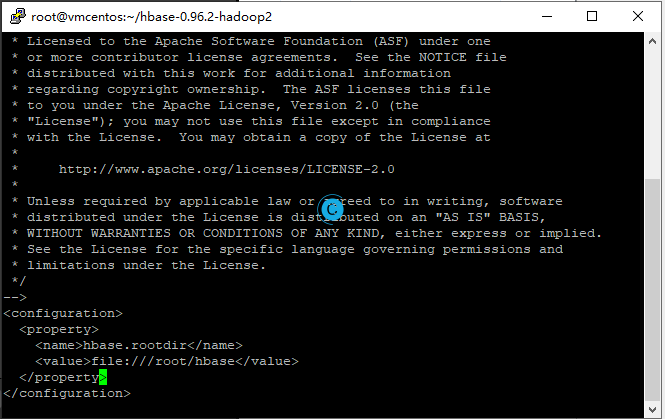

vi conf/hbase-site.xml

Change the directory to suit your setup:

<property>

<name>hbase.rootdir</name>

<value>file:///DIRECTORY/hbase</value>

</property>

Set HBASE_MANAGES_ZK to true in conf/hbase-env.sh.

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

# export HBASE_MANAGES_ZK=true

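A distributed HBase depends on a ZooKeeper ensemble, and every node and client must be able to reach it. By default HBase manages a ZooKeeper ensemble for you and starts it whenever HBase starts. You can also manage your own ZooKeeper ensemble, but HBase then has to be configured for it: switch the HBASE_MANAGES_ZK setting in conf/hbase-env.sh. It defaults to true, which makes HBase start ZooKeeper along with itself.

Then edit conf/hbase-site.xml: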

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://192.168.123.29:8020/hbase</value>

</property>

<property >

<name>hbase.tmp.dir</name>

<value>/opt/moduels/hbase-0.96.2-hadoop2/data/tmp</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>192.168.123.29</value>

</property>

</configuration>

For hbase.cluster.distributed, true means distributed cluster mode and false means standalone mode.

HBase can now be started with ./start-hbase.sh.

[root@vmcentos bin]# ./start-hbase.sh

starting master, logging to /root/hbase-0.96.2-hadoop2/bin/../logs/hbase-root-master-vmcentos.out

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/hbase-0.96.2-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/hadoop-2.4.1/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

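An SLF4J warning about multiple bindings is printed (HBase and Hadoop each ship their own slf4j-log4j12 jar); it can be ignored.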

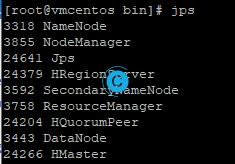

jps shows what has started; there are more processes than before.

3318 NameNode

3855 NodeManager

24641 Jps

24379 HRegionServer

3592 SecondaryNameNode

3758 ResourceManager

24204 HQuorumPeer

3443 DataNode

24266 HMaster

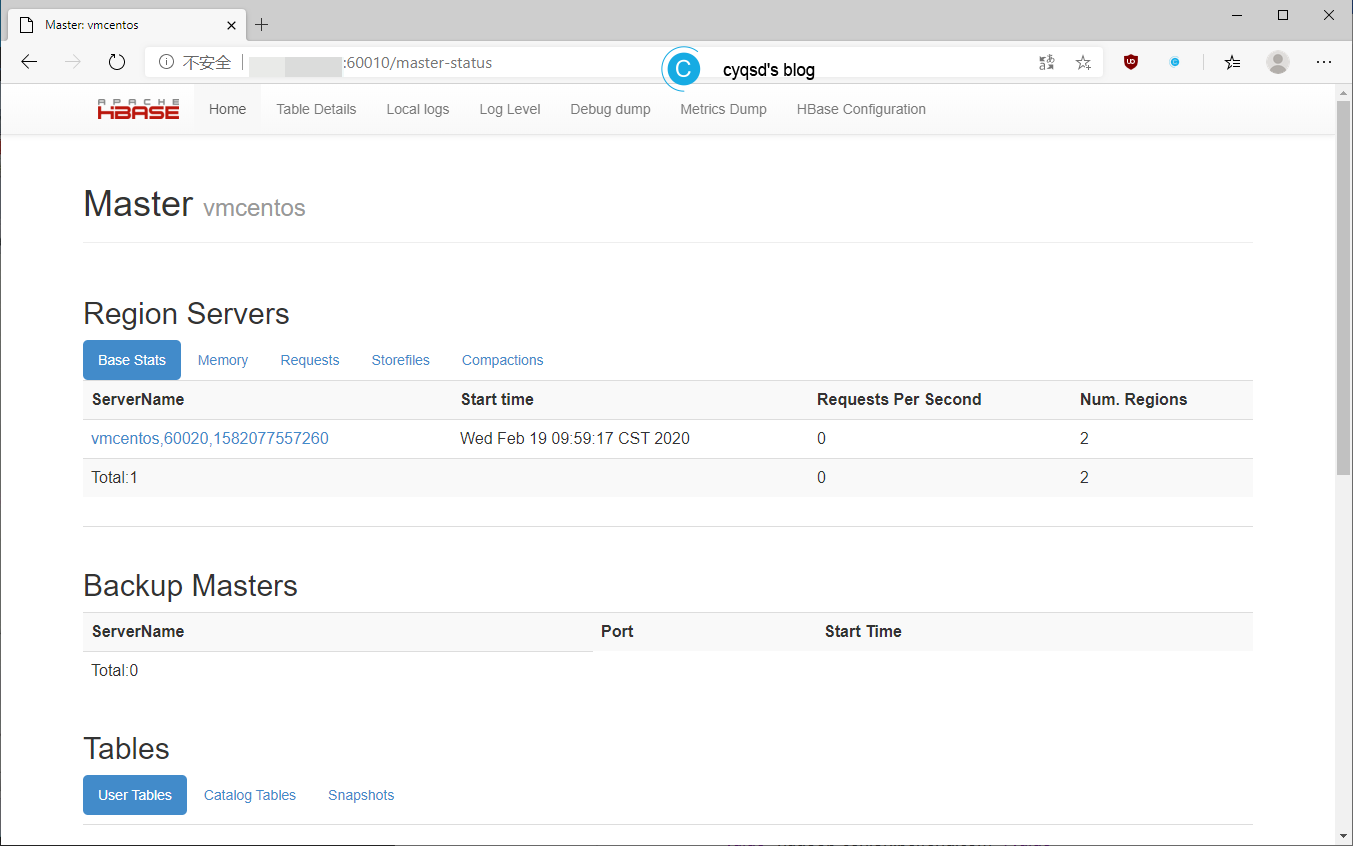

The web UI is reachable now as well.

HBase is now running and we can start working with it. Go into the bin directory and run ./hbase.

[root@vmcentos bin]# ./hbase

Usage: hbase [<options>] <command> [<args>]

Options:

--config DIR Configuration direction to use. Default: ./conf

--hosts HOSTS Override the list in 'regionservers' file

Commands:

Some commands take arguments. Pass no args or -h for usage.

shell Run the HBase shell

hbck Run the hbase 'fsck' tool

hlog Write-ahead-log analyzer

hfile Store file analyzer

zkcli Run the ZooKeeper shell

upgrade Upgrade hbase

master Run an HBase HMaster node

regionserver Run an HBase HRegionServer node

zookeeper Run a Zookeeper server

rest Run an HBase REST server

thrift Run the HBase Thrift server

thrift2 Run the HBase Thrift2 server

clean Run the HBase clean up script

classpath Dump hbase CLASSPATH

mapredcp Dump CLASSPATH entries required by mapreduce

version Print the version

CLASSNAME Run the class named CLASSNAME

That lists the basic commands.

2. Basic HBase usage

Adding shell to the command above runs the HBase shell.

From the bin directory, run ./hbase shell

HBase divides its shell commands into several groups:

COMMAND GROUPS:

Group name: general

Commands: status, table_help, version, whoami

Group name: ddl

Commands: alter, alter_async, alter_status, create, describe, disable, disable_all, drop, drop_all, enable, enable_all, exists, get_table, is_disabled, is_enabled, list, show_filters

Group name: namespace

Commands: alter_namespace, create_namespace, describe_namespace, drop_namespace, list_namespace, list_namespace_tables

Group name: dml

Commands: count, delete, deleteall, get, get_counter, incr, put, scan, truncate, truncate_preserve

Group name: tools

Commands: assign, balance_switch, balancer, catalogjanitor_enabled, catalogjanitor_run, catalogjanitor_switch, close_region, compact, flush, hlog_roll, major_compact, merge_region, move, split, trace, unassign, zk_dump

Group name: replication

Commands: add_peer, disable_peer, enable_peer, list_peers, list_replicated_tables, remove_peer

Group name: snapshot

Commands: clone_snapshot, delete_snapshot, list_snapshots, rename_snapshot, restore_snapshot, snapshot

Group name: security

Commands: grant, revoke, user_permission

For the difference between DDL (Data Definition Language) and DML (Data Manipulation Language), see the link below:

https://www.cnblogs.com/kawashibara/p/8961646.html

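Tables and data can of course be modified as well, but I won't go into that. The official documentation has section 1.2.3, Shell Exercises.

Connect to your HBase with the shell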

$ ./bin/hbase shell

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version: 0.90.0, r1001068, Fri Sep 24 13:55:42 PDT 2010

hbase(main):001:0>

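Type help and then <RETURN> to see the list of supported commands.

Create a table named test with a single column family named cf. List all tables to check that it was created, then insert some values.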

hbase(main):003:0> create 'test', 'cf'

0 row(s) in 1.2200 seconds

hbase(main):003:0> list 'test'

test

1 row(s) in 0.0550 seconds

hbase(main):004:0> put 'test', 'row1', 'cf:a', 'value1'

0 row(s) in 0.0560 seconds

hbase(main):005:0> put 'test', 'row2', 'cf:b', 'value2'

0 row(s) in 0.0370 seconds

hbase(main):006:0> put 'test', 'row3', 'cf:c', 'value3'

0 row(s) in 0.0450 seconds

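Above we inserted three rows. The first has row key row1, column cf:a, and value value1. A column in HBase is made up of a column family prefix and a column name, separated by a colon; in this row, for example, the column name is a.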
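To make the `family:name` convention concrete, here is a minimal sketch that splits a shell-style column string such as `cf:a` into its two parts. `ColumnNameSketch` is a made-up helper, not an HBase class, and splitting at the first colon is my assumption about how to treat any further colons.

```java
// Splits "cf:a" into column family "cf" and column name "a".
// Hypothetical helper for illustration; not part of HBase.
class ColumnNameSketch {
    // Returns {family, name}; name is "" when the string contains no colon.
    static String[] split(String column) {
        int idx = column.indexOf(':');
        if (idx < 0) {
            return new String[] { column, "" };
        }
        // Assumption: split at the first colon; anything after it belongs to the name.
        return new String[] { column.substring(0, idx), column.substring(idx + 1) };
    }

    public static void main(String[] args) {
        String[] parts = split("cf:a");
        System.out.println("family=" + parts[0] + ", name=" + parts[1]);
    }
}
```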

Check the inserts.

Scan the table as follows

hbase(main):007:0> scan 'test'

ROW COLUMN+CELL

row1 column=cf:a, timestamp=1288380727188, value=value1

row2 column=cf:b, timestamp=1288380738440, value=value2

row3 column=cf:c, timestamp=1288380747365, value=value3

3 row(s) in 0.0590 seconds

Get a single row as follows

hbase(main):008:0> get 'test', 'row1'

COLUMN CELL

cf:a timestamp=1288380727188, value=value1

1 row(s) in 0.0400 seconds

Disable and then drop the table to clean up what you just did

hbase(main):012:0> disable 'test'

0 row(s) in 1.0930 seconds

hbase(main):013:0> drop 'test'

0 row(s) in 0.0770 seconds

Exit the shell

hbase(main):014:0> exit

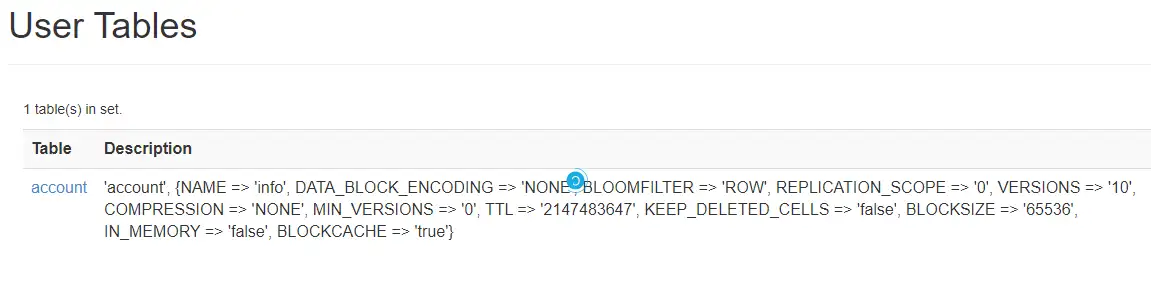

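3. Operating HBase from Java

Doing everything in the shell gets tedious. We still want to drive HBase from Java, just as we do with MySQL, which is far more convenient. Import whatever packages are needed.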

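import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.client.HBaseAdmin;

public class CreateTableDemo {
    public static void main(String[] args) throws Exception {
        Configuration conf = HBaseConfiguration.create();
        // ZooKeeper address the client uses to find the cluster
        conf.set("hbase.zookeeper.quorum", "192.168.123.29:2181");
        HBaseAdmin admin = new HBaseAdmin(conf);
        // Table "account" with a single column family "info"
        HTableDescriptor td = new HTableDescriptor("account");
        HColumnDescriptor cd = new HColumnDescriptor("info");
        cd.setMaxVersions(10); // keep up to 10 versions per cell
        td.addFamily(cd);
        admin.createTable(td);
        admin.close();
    }
}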

Sometimes the following error is thrown:

Exception in thread "main" java.lang.IllegalArgumentException: Not a host:port pair: PBUF

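See this post: hbase Not a host:port pair: PBUF. It typically points to client jars that are older than the HBase version running on the server.

The run log follows: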

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:host.name=DESKTOP-47RU81S.lan

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.version=1.7.0_80

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.vendor=Oracle Corporation

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_80\jre

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.class.path=

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.library.path=

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.compiler=<NA>

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.name=Windows 8.1

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.arch=amd64

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.version=6.3

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.name=Administrator

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.home=C:\Users\Administrator

INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.dir=C:\Users\Administrator\IdeaProjects\untitled3

INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=192.168.123.29:2181 sessionTimeout=90000 watcher=hconnection-0x3f19c149, quorum=192.168.123.29:2181, baseZNode=/hbase

INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=hconnection-0x3f19c149 connecting to ZooKeeper ensemble=192.168.123.29:2181

INFO [main-SendThread(vmcentos:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server vmcentos/192.168.123.29:2181. Will not attempt to authenticate using SASL (unknown error)

INFO [main-SendThread(vmcentos:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to vmcentos/192.168.123.29:2181, initiating session

INFO [main-SendThread(vmcentos:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server vmcentos/192.168.123.29:2181, sessionid = 0x1705b2baace000c, negotiated timeout = 90000

INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - hadoop.native.lib is deprecated. Instead, use io.native.lib.available

INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=192.168.123.29:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x3f19c149, quorum=192.168.123.29:2181, baseZNode=/hbase

INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=catalogtracker-on-hconnection-0x3f19c149 connecting to ZooKeeper ensemble=192.168.123.29:2181

INFO [main-SendThread(vmcentos:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server vmcentos/192.168.123.29:2181. Will not attempt to authenticate using SASL (unknown error)

INFO [main-SendThread(vmcentos:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to vmcentos/192.168.123.29:2181, initiating session

INFO [main-SendThread(vmcentos:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server vmcentos/192.168.123.29:2181, sessionid = 0x1705b2baace000d, negotiated timeout = 90000

INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x1705b2baace000d closed

INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(509)) - EventThread shut down

INFO [main] client.HConnectionManager$HConnectionImplementation (HConnectionManager.java:closeMasterService(2122)) - Closing master protocol: MasterService

INFO [main] client.HConnectionManager$HConnectionImplementation (HConnectionManager.java:closeZooKeeperWatcher(1798)) - Closing zookeeper sessionid=0x1705b2baace000c

INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x1705b2baace000c closed

INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(509)) - EventThread shut down

Process finished with exit code 0

The change is visible in the web UI as well.

4. HBase architecture (brief)

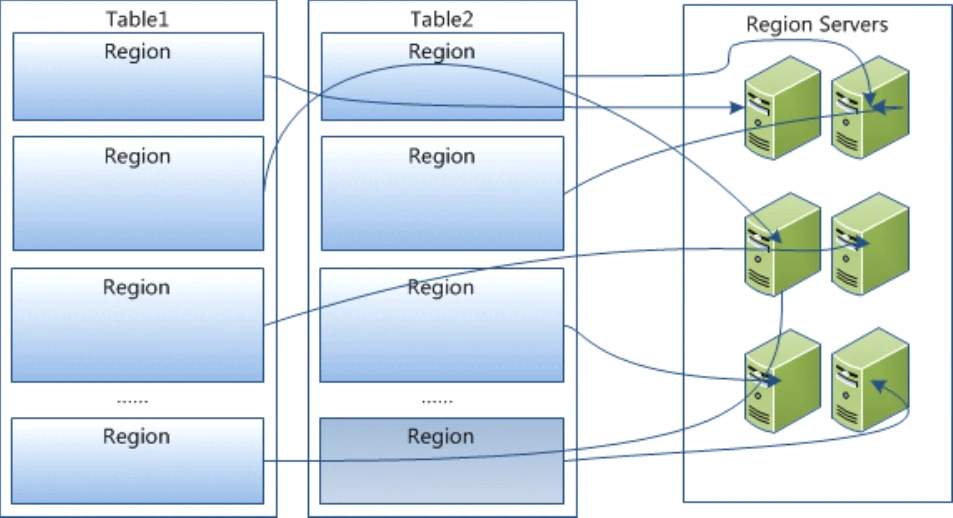

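A region is the smallest unit of distributed storage and load balancing.

An HRegionServer hosts regions. Recent writes can be thought of as sitting in memory (the MemStore), and they are flushed to HDFS periodically.

A table is split by row into multiple HRegions. Each region is identified by the half-open interval [startkey, endkey), and the HRegions are spread across different RegionServers.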
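The half-open interval [startkey, endkey) means every row key falls into exactly one region. A minimal sketch of that lookup, assuming regions are indexed by their start key and that each region's end key is simply the next region's start key, could look like this. `RegionLookupSketch` and the server names `rs1` to `rs3` are hypothetical; this is not how the HBase client is actually implemented.

```java
import java.util.Map;
import java.util.TreeMap;

// Toy lookup of "which region holds this row key?".
// Hypothetical names; regions are keyed by start key, and a region's
// end key is taken to be the start key of the next region.
class RegionLookupSketch {
    private final TreeMap<String, String> serverByStartKey = new TreeMap<>();

    void addRegion(String startKey, String serverName) {
        serverByStartKey.put(startKey, serverName);
    }

    // Finds the region with the greatest start key <= rowKey,
    // so the start key is inclusive and the end key exclusive.
    String locate(String rowKey) {
        Map.Entry<String, String> entry = serverByStartKey.floorEntry(rowKey);
        return entry == null ? null : entry.getValue();
    }

    public static void main(String[] args) {
        RegionLookupSketch table = new RegionLookupSketch();
        table.addRegion("", "rs1");     // first region starts at the empty key
        table.addRegion("row2", "rs2"); // covers [row2, row5)
        table.addRegion("row5", "rs3"); // covers [row5, end of table)
        System.out.println(table.locate("row1"));
        System.out.println(table.locate("row2"));
        System.out.println(table.locate("row5"));
    }
}
```

A row key equal to a boundary, such as `row5` here, belongs to the region that starts there rather than the one that ends there, which is exactly what the `)` in [startkey, endkey) expresses.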

Some other important concepts:

HMaster

HStore

HLog

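They are worth studying if you are interested.

5. Miscellaneous

Two extras are added at the end of this post, Sqoop and ZooKeeper. Being placed last does not mean they are unimportant.

5.1. ZooKeeper

I won't write much here either.

ZooKeeper Hands-On Primer (1): Introduction and Core Concepts

The official documentation covers:

- ZooKeeper -> 16.1. Using an existing ZooKeeper ensemble; 16.2. SASL authentication with ZooKeeper

5.2. Sqoop

Sqoop is open-source software for transferring data between different data stores, typically between relational databases and Hadoop. It supports many kinds of storage systems.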

D:\sqoop-1.4.4.bin__hadoop-2.0.4-alpha\bin>sqoop

Warning: HBASE_HOME and HBASE_VERSION not set.

Warning: HBASE_HOME does not exist HBase imports will fail.

Please set HBASE_HOME to the root of your HBase installation.

Try 'sqoop help' for usage.

D:\sqoop-1.4.4.bin__hadoop-2.0.4-alpha\bin>sqoop help

Warning: HBASE_HOME and HBASE_VERSION not set.

Warning: HBASE_HOME does not exist HBase imports will fail.

Please set HBASE_HOME to the root of your HBase installation.

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.